Privacy in machine learning models has become a serious concern due to membership inference attacks (MIAs). These attacks determine whether a particular data point was part of a model's training data. Understanding MIAs is critical because they measure how much information a model inadvertently leaks about the data it was trained on. MIAs apply to a variety of settings, from statistical models to federated and privacy-preserving machine learning. Originally rooted in attacks on summary statistics, MIA methodology has since evolved to use various hypothesis-testing strategies and approximations, particularly for deep learning algorithms.

Traditional MIA approaches face major challenges. Although attacks have become more effective, their computational demands make many privacy audits impractical. Some state-of-the-art methods, especially against well-generalized models, degrade to little better than random guessing when computational resources are constrained. Moreover, there is no clear, interpretable way to compare different attacks, so each attack can appear superior to the others depending on the scenario. These issues call for more robust and efficient attacks to assess privacy risk. In particular, the computational cost of existing attacks limits their utility, highlighting the need for strategies that achieve high performance within a limited computational budget.

In this context, a new paper proposes a new attack within the realm of membership inference. A membership inference attack aims to determine whether a particular data point x was used to train a particular machine learning model θ, and it is formalized as an indistinguishability game between two worlds: in one, the model θ is trained with x, and in the other, without it. The adversary's task is to infer which of these two worlds she is in, based on her knowledge of x, the trained model θ, and the data distribution.
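To make the game concrete, here is a minimal Python simulation under toy assumptions of our own: the "model" θ is simply the mean of its training data (a summary statistic, as in the earliest MIAs), data are drawn from a standard normal population, and the adversary uses a simple hypothetical rule (guess "member" if θ lies closer to x than the population mean does). This is not the paper's attack; it only illustrates the two-world game.

```python
import random
import statistics

POPULATION_MEAN = 0.0  # toy assumption: the population is N(0, 1)
TRAIN_SIZE = 5         # toy assumption: small training sets leak more

def train(dataset):
    """Toy 'model': the mean of the training data."""
    return statistics.fmean(dataset)

def adversary_guess(theta, x):
    """Hypothetical rule: guess member (1) if theta was pulled toward x."""
    return 1 if abs(theta - x) < abs(POPULATION_MEAN - x) else 0

def play_round(rng):
    """One round of the membership inference (indistinguishability) game."""
    data = [rng.gauss(POPULATION_MEAN, 1.0) for _ in range(TRAIN_SIZE)]
    x = rng.gauss(POPULATION_MEAN, 1.0)
    b = rng.randint(0, 1)  # challenger's secret bit: 1 = the world where x is a member
    theta = train(data + [x] if b == 1 else data)
    return adversary_guess(theta, x) == b

rng = random.Random(0)
trials = 10_000
accuracy = sum(play_round(rng) for _ in range(trials)) / trials
print(f"adversary accuracy: {accuracy:.3f}")  # 0.5 would mean no detectable leakage
```

With these settings the accuracy should land modestly above the 0.5 that pure guessing achieves, because including x shifts θ toward x. Larger training sets shrink that shift and push the accuracy back toward 0.5.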

The new MIA technique takes a fine-grained approach to constructing the two worlds in which x is either a member or a non-member of the training set. Unlike traditional techniques that simplify these constructions, it carefully constructs the null hypothesis by replacing x with a random data point z from the population. This design yields many pairwise likelihood ratio tests, each measuring the membership of x relative to another data point z. The attack thus gathers evidence that x, rather than a random z, is in the training set, giving a more nuanced analysis of leakage. It computes the likelihood ratios corresponding to x and z and distinguishes between the member and non-member scenarios through a likelihood ratio test.
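The pairwise test can be sketched in a few lines of Python. The formula below is our reading of the paper's pairwise likelihood ratio, LR_θ(x, z) = [Pr(x|θ) / Pr(x)] / [Pr(z|θ) / Pr(z)], with x's score taken as the fraction of population points z for which this ratio is at least a threshold γ. The function names, the default γ = 1, and all probabilities in the example are hypothetical placeholders, not values from the paper.

```python
def pairwise_likelihood_ratio(p_x_theta, p_x, p_z_theta, p_z):
    """LR(x, z) = [Pr(x|theta) / Pr(x)] / [Pr(z|theta) / Pr(z)]."""
    return (p_x_theta / p_x) / (p_z_theta / p_z)

def relative_membership_score(p_x_theta, p_x, population, gamma=1.0):
    """Fraction of population points z that x dominates by a factor of at least gamma.

    `population` is a list of (Pr(z|theta), Pr(z)) pairs, one per sampled z.
    """
    if not population:
        raise ValueError("need at least one population point z")
    wins = sum(
        pairwise_likelihood_ratio(p_x_theta, p_x, p_z_theta, p_z) >= gamma
        for p_z_theta, p_z in population
    )
    return wins / len(population)

# Hypothetical probabilities for one target point x and four population points z.
score = relative_membership_score(
    p_x_theta=0.9, p_x=0.6,
    population=[(0.8, 0.8), (0.5, 0.25), (0.3, 0.3), (0.7, 0.5)],
)
print(score)  # 0.75: x beats 3 of the 4 z's
```

In this sketch, membership would then be decided by comparing the score with a second threshold (declare x a member when the score is high enough); sweeping that threshold traces out the attack's ROC curve.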

The technique, named Relative Membership Inference Attack (RMIA), leverages population data and reference models to make the attack more effective and more robust to variations in the attacker's background knowledge. It introduces a likelihood ratio test that measures how distinguishable x is from any z, based on the shift in their probabilities conditional on θ. Unlike existing attacks, it neither relies on uncalibrated scales nor overlooks the adjustments that population data make possible. Through careful computation of pairwise likelihood ratios and a Bayesian approach, RMIA is a robust, high-power, and cost-effective attack that outperforms state-of-the-art techniques across a variety of scenarios.
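The quantities in the pairwise ratio have to come from somewhere. Here is a minimal sketch, assuming (as we understand the paper's setup for classifiers) that Pr(x|θ) is the softmax probability the model assigns to x's true label, and that the marginal Pr(x) is approximated by averaging that probability over reference models. The logits below are hypothetical, and the function names are our own.

```python
import math
from statistics import fmean

def true_label_prob(logits, label):
    """Pr(x|theta): softmax probability of the true label (numerically stable)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    return exps[label] / sum(exps)

def normalized_prob(target_logits, reference_logits, label):
    """Pr(x|theta) / Pr(x), with Pr(x) averaged over the reference models."""
    p_target = true_label_prob(target_logits, label)
    p_marginal = fmean(true_label_prob(ref, label) for ref in reference_logits)
    return p_target / p_marginal

# Hypothetical logits for one 3-class example whose true label is 0.
target = [2.0, 0.0, 0.0]                         # target model theta
references = [[1.0, 0.0, 0.0], [0.5, 0.0, 0.5]]  # two reference models
ratio = normalized_prob(target, references, label=0)
print(round(ratio, 3))  # > 1: theta is more confident on x than the references are on average
```

A ratio above 1 means the target model is more confident on x than the reference models are on average, which is the kind of probability shift conditional on θ that the test exploits. The same normalization would be applied to each population point z before forming the pairwise ratios.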

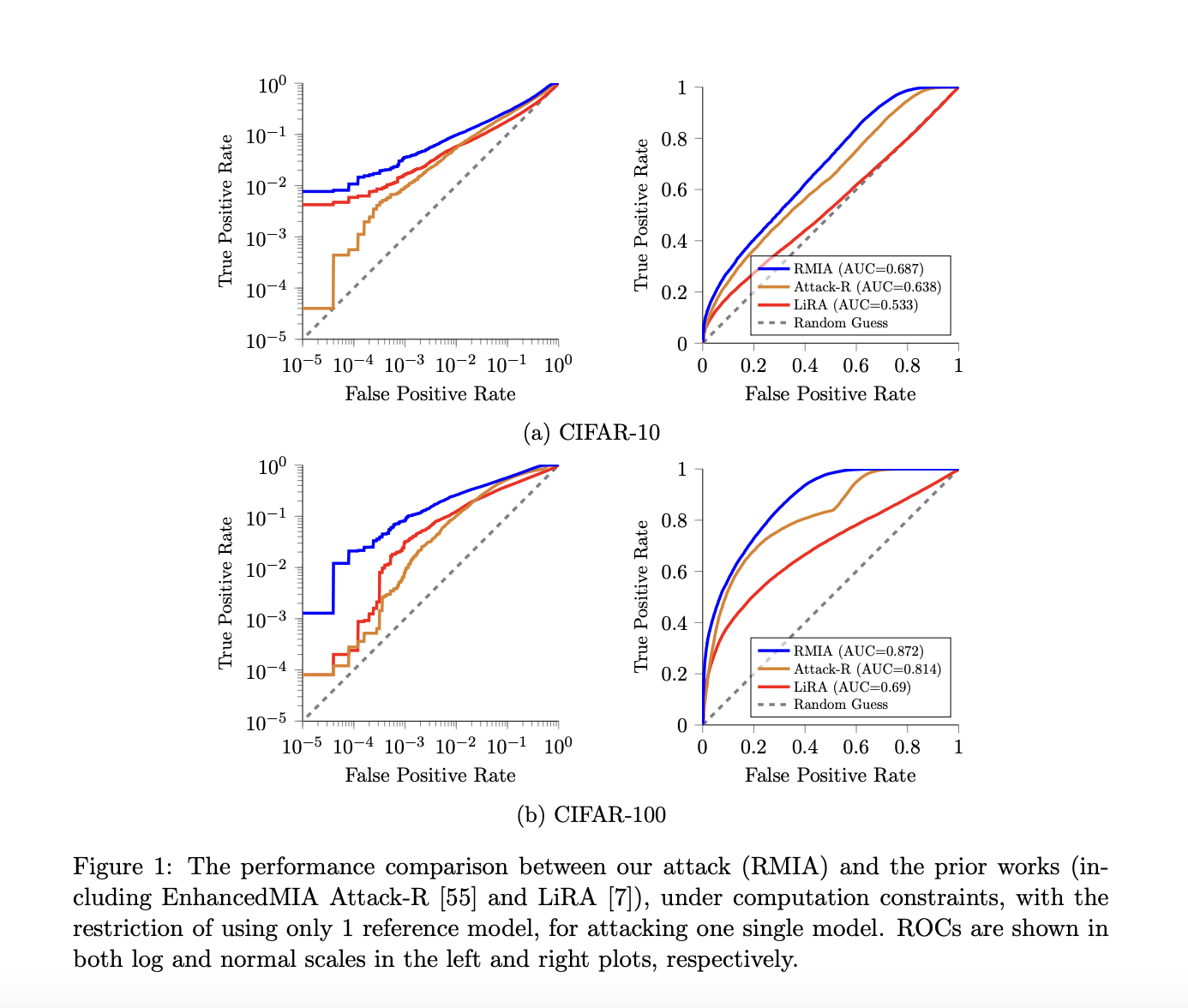

The authors compared RMIA to other membership inference attacks on datasets such as CIFAR-10, CIFAR-100, CINIC-10, and Purchase-100. RMIA consistently outperformed the other attacks, especially when only a few reference models were available and in offline scenarios. Even with few reference models, RMIA's results were close to those obtained with many models. When many reference models were available, RMIA kept a slight AUC advantage over LiRA and achieved a significantly higher TPR at zero FPR. Its performance improved as the number of queries increased, and its effectiveness held across a variety of scenarios and datasets.
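The comparison rests on two standard metrics: AUC, the area under the ROC curve, and TPR at a fixed (here zero) FPR, which counts how many members an attack identifies without a single false accusation. A minimal Python sketch of both follows; the scores are made-up toy values, not results from the paper, and the attack is assumed to predict "member" whenever a score meets the threshold.

```python
def auc(member_scores, nonmember_scores):
    """Probability that a random member outscores a random non-member (ties count half)."""
    wins = 0.0
    for m in member_scores:
        for n in nonmember_scores:
            wins += 1.0 if m > n else 0.5 if m == n else 0.0
    return wins / (len(member_scores) * len(nonmember_scores))

def tpr_at_fpr(member_scores, nonmember_scores, max_fpr):
    """Highest TPR over thresholds whose FPR does not exceed max_fpr."""
    best = 0.0  # a threshold above every score gives TPR = FPR = 0
    for t in sorted(set(member_scores) | set(nonmember_scores)):
        fpr = sum(s >= t for s in nonmember_scores) / len(nonmember_scores)
        if fpr <= max_fpr:
            tpr = sum(s >= t for s in member_scores) / len(member_scores)
            best = max(best, tpr)
    return best

members = [0.9, 0.8, 0.4]     # toy attack scores for true members
nonmembers = [0.7, 0.3, 0.2]  # toy attack scores for true non-members
print(round(auc(members, nonmembers), 3))              # 0.889
print(round(tpr_at_fpr(members, nonmembers, 0.0), 3))  # 0.667
```

AUC rewards good average-case separation, while TPR at zero FPR rewards confident, error-free identification of some members. This is why a large TPR gain can coexist with only a slight AUC edge, as in the RMIA versus LiRA comparison.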

In conclusion, the paper introduces RMIA, a relative membership inference attack, and demonstrates its superiority over existing attacks at determining membership in machine learning models. RMIA excels when few reference models are available and performs robustly across a variety of datasets and model architectures. Its efficiency makes it a practical option for privacy risk analysis, especially where resources are constrained. RMIA's flexibility, scalability, and balanced trade-off between true and false positives make it a reliable, adaptable method for membership inference and privacy risk analysis of machine learning models, with promising applications.

Check out the paper. All credit for this research goes to the researchers of this project.

Mahmoud is a PhD researcher in machine learning. He also holds bachelor's and master's degrees in physical sciences and in telecommunications and network systems. His current research focuses on computer vision, stock market prediction, and deep learning. He has authored several scientific papers on person re-identification and on the robustness and stability of deep networks.
