In computer vision, the struggle to balance training efficiency and performance is becoming increasingly prominent. Traditional training methods often rely on vast datasets, which places a heavy burden on computational resources and creates a notable barrier for researchers with limited access to high-performance computing infrastructure. The problem is that many existing solutions reduce the number of training samples but introduce additional overhead or fail to maintain the model's original performance, negating their benefits.

At the heart of this challenge is optimizing the training of deep learning models, a critical and resource-intensive task. The main hurdle is meeting the computational demands of training on large datasets without compromising model effectiveness. This has become a pressing concern in the field, where efficiency and performance must coexist to make machine learning applications practical and accessible.

Existing solutions include techniques such as dataset distillation and coreset selection, both of which aim to reduce the number of training samples. Although intuitively appealing, these approaches introduce new complexities. For example, static pruning techniques, which select samples based on specific metrics before training, often incur additional computational costs and struggle to generalize across architectures or datasets. Dynamic data pruning techniques, on the other hand, aim to reduce training costs by reducing the number of iterations. However, they often fail to achieve lossless results or to run efficiently in practice.

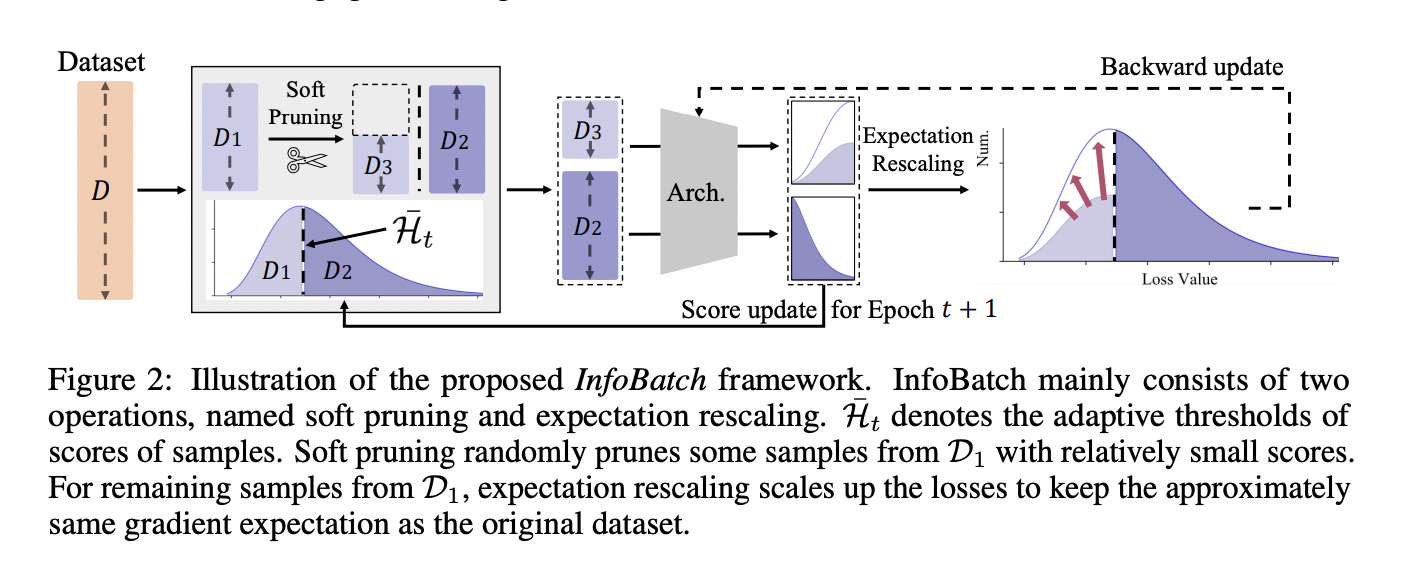

Researchers from the National University of Singapore and Alibaba Group have introduced InfoBatch, a framework designed to accelerate training without sacrificing accuracy. InfoBatch distinguishes itself from previous methods through unbiased, adaptive, dynamic data pruning. It maintains a loss-based score for each data sample and updates it throughout training. The framework then prunes uninformative samples, identified by their low scores, and compensates for the pruning by scaling up the gradients of the remaining samples. This keeps the expected gradient close to that of the original, unpruned dataset, thus preserving model performance.
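The prune-and-rescale step can be sketched in a few lines of standard-library Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the function names `infobatch_prune` and `update_scores` are hypothetical, and the specific rule used here (treat samples scoring below the mean score as low-score candidates, drop each candidate with probability `prune_prob`, and weight the surviving candidates' losses by 1 / (1 - prune_prob)) is an assumption modeled on the paper's description. The rescaling is what keeps the gradient unbiased: a low-score sample survives with probability 1 - prune_prob and, when it does, counts 1 / (1 - prune_prob) times, so its expected contribution is unchanged.

```python
import random
from statistics import fmean


def infobatch_prune(scores, prune_prob, rng):
    """Return (kept_indices, loss_weights) for one epoch.

    Assumed rule (illustrative, not the official code): samples scoring below
    the mean are low-score candidates; each is dropped with probability
    `prune_prob`, and surviving candidates get weight 1 / (1 - prune_prob).
    All other samples are always kept with weight 1.
    """
    if not 0.0 <= prune_prob < 1.0:
        raise ValueError("prune_prob must be in [0, 1)")
    threshold = fmean(scores)
    scale = 1.0 / (1.0 - prune_prob)
    kept, weights = [], []
    for i, s in enumerate(scores):
        if s < threshold:
            if rng.random() < prune_prob:
                continue  # pruned this epoch: no forward/backward pass
            kept.append(i)
            weights.append(scale)  # rescale so the expected gradient is unchanged
        else:
            kept.append(i)
            weights.append(1.0)
    return kept, weights


def update_scores(scores, indices, losses):
    """Return a copy of `scores` with the latest loss written for each visited sample."""
    new_scores = list(scores)
    for i, loss in zip(indices, losses):
        new_scores[i] = loss
    return new_scores


if __name__ == "__main__":
    rng = random.Random(0)
    scores = [0.1, 0.2, 0.3, 2.0, 3.0]  # toy per-sample losses from the previous epoch
    kept, weights = infobatch_prune(scores, prune_prob=0.5, rng=rng)
    print(list(zip(kept, weights)))

    # Monte Carlo check of unbiasedness: give every sample a unit "gradient" and
    # average the weighted sum over many epochs; it should approach len(scores).
    trials = 20000
    total = sum(sum(infobatch_prune(scores, 0.5, rng)[1]) for _ in range(trials))
    print(total / trials)
```

In a training loop, the returned weights would multiply the per-sample losses of the kept samples before backpropagation, and `update_scores` would record their fresh losses so the next epoch prunes against current scores; pruned samples simply keep their previous score. Any scheduling details beyond this single step are omitted from the sketch.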

This framework has been shown to significantly reduce computational overhead and, according to the authors, to outperform previous state-of-the-art methods in efficiency by a factor of at least 10. This efficiency gain does not come at the expense of performance: InfoBatch consistently achieves lossless training results across a variety of tasks, including classification, semantic segmentation, vision pretraining, and instruction fine-tuning of language models. In practice, this translates into significant savings in computation and time. For example, InfoBatch saves up to 40% of overall cost on datasets such as CIFAR10/100 and ImageNet1K, and saves 24.8% and 27% of the cost when pretraining MAE and diffusion models, respectively.

In summary, the key takeaways from the InfoBatch study are:

- InfoBatch introduces a new framework for unbiased dynamic data pruning that sets it apart from traditional static and dynamic pruning techniques.

- This framework significantly reduces computational overhead, making it practical for real-world applications, especially those with limited computational resources.

- Despite the increased efficiency, InfoBatch consistently achieves lossless training results across a variety of tasks.

- The versatility of this framework is demonstrated by its effective application to a range of machine learning tasks, from classification to instruction fine-tuning of language models.

- InfoBatch’s balance between efficiency and performance could have a significant impact on the future of machine learning training methodologies.

In conclusion, InfoBatch represents a significant advance in machine learning, offering a practical solution to the long-standing challenge of balancing training cost against model performance.

Check out the paper. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I’m a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at Indian Institute of Technology Kharagpur. I’m passionate about technology and want to create new products that make a difference.